1. 什么是 Scrapy CrawlSpider?

CrawlSpider 是 Scrapy 的派生类,Spider 类的设计原则是只抓取 start_url 列表中的网页。相比之下,CrawlSpider 类定义了一些规则,为跟踪链接提供了一种方便的机制--从刮擦中提取链接 亚马逊 网页并继续抓取。

CrawlSpider 可以匹配符合特定条件的 URL,将其组合成 Request 对象,并在指定回调函数的同时自动将其发送给引擎。换句话说,CrawlSpider 爬虫可以根据预定义的规则自动检索连接。

2. 创建用于搜索亚马逊的 CrawlSpider 爬虫

scrapy genspider -t crawl spider_name domain_name创建 Scraping Amazon 爬虫命令:

例如,创建一个名为 "amazonTop "的亚马逊爬虫:

scrapy genspider -t crawl amzonTop amazon.com下面的文字就是整个代码:

导入 scrapy

从 scrapy.linkextractors 导入 LinkExtractor

从 scrapy.spiders 导入 CrawlSpider、Rule

类 TSpider(CrawlSpider):

name = 'amzonTop

allowed_domains = ['amazon.com']

start_urls = ['https://amazon.com/']

rules = (

Rule(LinkExtractor(allow=r'Items/'), callback='parse_item', follow=True)、

)

def parse_item(self, response):

item = {}

# item['domain_id'] = response.xpath('//input[@id="sid"]/@value').get()

# item['name'] = response.xpath('//div[@id="name"]').get()

# item['description'] = response.xpath('//div[@id="description"]').get()

返回 item

Rules 是包含 Rule 对象的元组或列表。规则由 LinkExtractor、callback 和 follow 等参数组成。

A.链接提取器 链接提取器,可使用 regex、XPath 或 CSS 匹配 URL 地址。

B. 回调: 提取 URL 地址的回调函数,可选。

C. 遵循: 表示与提取的 URL 地址相对应的响应是否将继续由规则处理。true "表示继续处理,"false "表示不继续处理。

3. 搜索亚马逊产品数据

3.1 创建亚马逊抓取爬虫

scrapy genspider -t crawl amazonTop2 amazon.com蜘蛛代码的结构:

3.2 提取用于分页产品列表和产品详细信息的 URL。

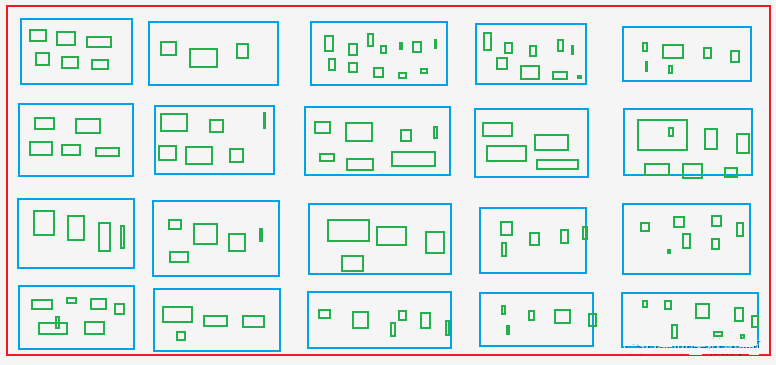

A.从产品列表页面提取所有产品的阿信和排名,即从产品列表页面的 蓝箱 在图像中。

B.从产品详细信息页面提取所有颜色和规格的 Asin,即从 绿箱其中包括蓝色方框中的阿信。

绿色方框:如购物网站中衣服的 X 号、M 号、L 号、XL 号和 XXL 号。

蜘蛛文件:amzonTop2.py

导入日期时间

导入时间

导入时间

从 copy 导入 deepcopy

导入 scrapy

from scrapy.linkextractors import LinkExtractor

from scrapy.spiders import CrawlSpider, Rule

类 Amazontop2Spider(CrawlSpider):

name = 'amazonTop2

allowed_domains = ['amazon.com'] (允许域

# https://www.amazon.com/Best-Sellers-Tools-Home-Improvement-Wallpaper/zgbs/hi/2242314011/ref=zg_bs_pg_2?_encoding=UTF8&pg=1

start_urls = ['https://amazon.com/Best-Sellers-Tools-Home-Improvement-Wallpaper/zgbs/hi/2242314011/ref=zg_bs_pg_2']

rules = [

Rule(LinkExtractor(restrict_css=('.a-selected','.a-normal')), callback='parse_item', follow=True)、

]

def parse_item(self, response):

asin_list_str = "".join(response.xpath('//div[@class="p13n-desktop-grid"]/@data-client-recs-list').extract())

if asin_list_str:

asin_list = eval(asin_list_str)

for asinDict in asin_list:

item = {}

如果 str(asinDict) 中有"'id'",则

listProAsin = asinDict['id']

pro_rank = asinDict['元数据图']['render.zg.rank']

item['rank'] = pro_rank

item['ListAsin'] = listProAsin

item['rankAsinUrl'] =f "https://www.amazon.com/Textile-Decorative-Striped-Corduroy-Pillowcases/dp/{listProAsin}/ref=zg_bs_3732341_sccl_1/136-3072892-8658650?psc=1"

打印("-"*30)

打印(项目)

print('-'*30)

yield scrapy.Request(item["rankAsinUrl"], callback=self.parse_list_asin、

meta={"main_info": deepcopy(item)})

def parse_list_asin(self, response):

"""

:param response:

:return:

"""

news_info = response.meta["main_info"]

list_ASIN_all_findall = re.findall('"colorToAsin":(.*?), "refactorEnabled":true,', str(response.text))

try:

try:

parentASIN = re.findall(r', "parentAsin":"(.*?)",', str(response.text))[-1]

except:

parentASIN = re.findall(r'&parentAsin=(.*?)&', str(response.text))[-1]

except:

parentASIN = ''

# parentASIN = parentASIN[-1] if parentASIN !=[] else ""

print("parentASIN:",parentASIN)

如果 list_ASIN_all_findall:

list_ASIN_all_str = "".join(list_ASIN_all_findall)

list_ASIN_all_dict = eval(list_ASIN_all_str)

for asin_min_key, asin_min_value in list_ASIN_all_dict.items():

if asin_min_value:

asin_min_value = asin_min_value['asin']。

news_info['parentASIN'] = parentASIN

news_info['secondASIN'] = asin_min_value

news_info['rankSecondASINUrl'] = f "https://www.amazon.com/Textile-Decorative-Striped-Corduroy-Pillowcases/dp/{asin_min_value}/ref=zg_bs_3732341_sccl_1/136-3072892-8658650?psc=1"

yield scrapy.Request(news_info["rankSecondASINUrl"], callback=self.parse_detail_info,meta={"news_info": deepcopy(news_info)})

def parse_detail_info(self, response):

"""

:param response:

:return:

"""

item = response.meta['news_info']

ASIN = item['secondASIN']

# print('--------------------------------------------------------------------------------------------')

# with open('amazon_h.html', 'w') as f:

# f.write(response.body.decode())

# print('--------------------------------------------------------------------------------------------')

pro_details = response.xpath('//table[@id="productDetails_detailBullets_sections1"]//tr')

pro_detail = {}

for pro_detail.xpath( //table[@id="productDetails_detailBullets_sections1"]//tr')

pro_detail[pro_row.xpath('./th/text()').extract_first().strip()] = pro_row.xpath('./td//text()').extract_first().strip()

print("pro_detail",pro_detail)

ships_from_list = response.xpath(

'//div[@tabular-attribute-name="Ships from"]/div//span//text()').extract()

# 物流方

尝试:

delivery = ships_from_list[-1]

except:

delivery = ""

seller = "".join(response.xpath('//div[@id="tabular-buybox"]//div[@class="tabular-buybox-text"][3]//text()').extract()).strip().replace("'", "")

if seller == "":

seller = "".join(response.xpath('//div[@class="a-section a-spacing-base"]/div[2]/a/text()').extract().strip().replace("'", "")

seller_link_str = "".join(response.xpath('//div[@id="tabular-buybox"]//div[@class="tabular-buybox-text"][3]//a/@href').extract())

# if seller_link_str:

# seller_link = "https://www.amazon.com"+ seller_link_str

# 否则

# seller_link = ''

seller_link = "https://www.amazon.com"+ seller_link_str if seller_link_str else ''

brand_link = response.xpath('//div[@id="bylineInfo_feature_div"]/div[@class="a-section a-spacing-none"]/a/@href').extract_first()

pic_link = response.xpath('//div[@id="main-image-container"]/ul/li[1]//img/@src').extract_first()

title = response.xpath('//div[@id="titleSection"]/h1//text()').extract_first()

star = response.xpath('//div[@id="averageCustomerReviews_feature_div"]/div[1]//span[@class="a-iconalt"]//text()').extract_first().strip()

try:

price = response.xpath('//div[@class="a-section a-spacing-none aok-align-center"]/span[2]/span[@class="a-offscreen"]//text()').extract_first()

except:

try:

price = response.xpath('//div[@class="a-section a-spacing-none aok-align-center"]/span[1]/span[@class="a-offscreen"]//text()').extract_first()

except:

price = ''

size = response.xpath('//li[@class="swatchSelect"]//p[@class="a-text-left a-size-base"]//text()').extract_first()

key_v = str(pro_detail.keys())

brand = pro_detail['品牌'],如果 key_v 中有 "品牌",否则为''

如果 brand == '':

brand = response.xpath('//tr[@class="a-spacing-small po-brand"]/td[2]//text()').extract_first().strip()

elif brand == "":

brand = response.xpath('//div[@id="bylineInfo_feature_div"]/div[@class="a-section a-spacing-none"]/a/text()').extract_first().replace("Brand: ", "").replace("Visit the", "").replace("Store", '').strip()

color = pro_detail['颜色'],如果 key_v 中有 "颜色",否则为""

如果 color == "":

color = response.xpath('//tr[@class="a-spacing-small po-color"]/td[2]//text()').extract_first()

elif color == '':

color = response.xpath('//div[@id="variation_color_name"]/div[@class="a-row"]/span//text()').extract_first()

pattern = pro_detail['Pattern'] if "Pattern" in key_v else ""

如果 pattern == "":

pattern = response.xpath('//tr[@class="a-spacing-small po-pattern"]/td[2]//text()').extract_first().strip()

# 材料

try:

material = pro_detail['材料']

except:

material = response.xpath('//tr[@class="a-spacing-small po-material"]/td[2]//text()').extract_first().strip()

# 形状

shape = pro_detail['形状'],如果 key_v 中有 "形状",否则为""

如果 shape == "":

shape = response.xpath('//tr[@class="a-spacing-small po-item_shape"]/td[2]//text()').extract_first().strip()

# 样式

# five_points

five_points =response.xpath('//div[@id="feature-bullets"]/ul/li[position()>1]//text()').extract_first().replace("\"", "'")

size_num = len(response.xpath('//div[@id="variation_size_name"]/ul/li').extract())

color_num = len(response.xpath('//div[@id="variation_color_name"]//li').extract())

# variant_num =

# 样式

# 制造商

try:

Manufacturer = pro_detail['制造商'],if "Manufacturer" in str(pro_detail) else " "

except:

制造商 = ""

item_weight = pro_detail['Item Weight'] if "Weight" in str(pro_detail) else ''

product_dim = pro_detail['产品尺寸'] if "Product Dimensions" in str(pro_detail) else ''

# 产品材料

try:

product_material = pro_detail['材料']

except:

product_material = ''

# 织物类型

try:

fabric_type = pro_detail['Fabric Type'] if "Fabric Type" in str(pro_detail) else " "

except:

fabric_type = ""

star_list = response.xpath('//table[@id="histogramTable"]//tr[@class="a-histogram-row a-align-center"]//td[3]//a/text()').extract()

if star_list:

try:

star_1 = star_list[0].strip()

except:

star_1 = 0

try:

star_2 = star_list[1].strip()

except:

star_2 = 0

try:

star_3 = star_list[2].strip()

except:

star_3 = 0

try:

star_4 = star_list[3].strip()

except:

star_4 = 0

try:

star_5 = star_list[4].strip()

except:

star_5 = 0

else:

star_1 = 0

星_2 = 0

星_3 = 0

星_4 = 0

星_5 = 0

如果 str(pro_detail) 中有 "首次可用日期",则

data_first_available = pro_detail['首次可用日期']。

if data_first_available:

data_first_available = datetime.datetime.strftime(

datetime.datetime.strptime(data_first_available, '%B %d, %Y'), '%Y/%m/%d')

否则:

data_first_available = ""

reviews_link = f'https://www.amazon.com/MIULEE-Decorative-Pillowcase-Cushion-Bedroom/product-reviews/{ASIN}/ref=cm_cr_arp_d_viewopt_fmt?ie=UTF8&reviewerType=all_reviews&formatType=current_format&pageNumber=1'

# 评论数、评分数

scrap_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())

item['delivery']=delivery

item['seller']=seller

item['seller_link']= seller_link

item['brand_link']= brand_link

item['pic_link']=pic_link

item['title']= 标题

item['brand']=brand

item['star']=star

item['price']=price

item['color']=color

item['图案']=图案

item['材质']=材质

item['形状']=形状

item['five_points']=five_points

item['size_num']=size_num

item['color_num']=color_num

item['制造商']=制造商

item['item_weight']=item_weight

item['产品尺寸']=产品尺寸

item['产品材质']=产品材质

item['fabric_type']=fabric_type

item['star_1']=star_1

item['star_2']=star_2

item['star_3']=star_3

item['star_4']=star_4

item['star_5']=star_5

# item['ratings_num'] = ratings_num

# item['reviews_num'] = reviews_num

item['scrap_time']=scrap_time

item['reviews_link']=reviews_link

item['size']=size

item['data_first_available']=data_first_available

产生项目

收集大量数据时,应更改 IP 并处理验证码识别。

4. 下载中间件的方法

4.1 process_request(self, request, spider)

A.在每个请求通过下载中间件时调用。

B.返回 None:如果没有返回值(或明确返回 None),请求对象将被传递给下载器或其他权重较低的 process_request 方法。

C.返回响应对象:不再提出其他请求,并将响应返回给引擎。

D.返回请求对象:请求对象通过引擎传递给调度程序。其他权重较低的 process_request 方法会被跳过。

4.2 process_response(self, request, response, spider)

A.下载器完成 HTTP 请求并将响应传递给引擎时调用。

B.返回响应:传递给 spider 进行处理,或传递给其他下载中间件的 process_response 方法,权重较低。

C.返回请求对象:通过引擎传递给调度程序,以接收更多请求。其他权重较低的 process_request 方法会被跳过。

D.在 settings.py 中配置中间件激活并设置权重值。权重越低,优先级越高。

middlewares.py

4.3 设置代理 IP

类 ProxyMiddleware(对象):

def process_request(self,request, spider):

request.meta['proxy'] = proxyServer

request.header["Proxy-Authorization"] = proxyAuth

def process_response(self, request, response, spider):

if response.status != '200':

request.dont_filter = True

返回请求

class AmazonspiderDownloaderMiddleware:

# 并非所有方法都需要定义。如果没有定义方法、

# scrapy 的行为就好像下载器中间件没有修改

# 传递的对象。

@classmethod

def from_crawler(cls, crawler):

# Scrapy 使用此方法创建蜘蛛。

s = cls()

crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)

返回 s

def process_request(self, request, spider):

# USER_AGENTS_LIST: setting.py

user_agent = random.choice(USER_AGENTS_LIST)

request.headers['User-Agent'] = user_agent

cookies_str = "从浏览器粘贴的 cookie

# 将 cookie_str 传输到 cookie_dict

cookies_dict = {i[:i.find('=')]: i[i.find('=') + 1:] for i in cookies_str.split('; ')}

request.cookies = cookies_dict

# print("---------------------------------------------------")

# 打印(request.headers)

# print("---------------------------------------------------")

返回 None

def process_response(self, request, response, spider):

return response

def process_exception(self, request, exception, spider):

通过

def spider_opened(self, spider):

spider.logger.info('Spider opened: %s' % spider.name)

4.5 获取 刮削 亚马逊的验证码,用于从亚马逊解锁。

def captcha_verfiy(img_name):

# captcha_verfiy

reader = easyocr.Reader(['ch_sim', 'en'])

# 阅读器 = easyocr.Reader(['en'], detection='DB', recognition = 'Transformer')

result = reader.readtext(img_name, detail=0)[0]

# result = reader.readtext('https://www.somewebsite.com/chinese_tra.jpg')

如果 result:

result = result.replace(' ', '')

返回结果

def download_captcha(captcha_url):

# 下载验证码

response = requests.get(captcha_url, stream=True)

try:

with open(r'./captcha.png', 'wb') as logFile:

for chunk in response:

logFile.write(chunk)

logFile.close()

print("Download done!")

except Exception as e:

print("Download log error!")

类 AmazonspiderVerifyMiddleware:

@classmethod

def from_crawler(cls, crawler):

s = cls()

crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)

返回 s

def process_request(self, request, spider):

返回 None

def process_response(self, request, response, spider):

# print(response.url)

if 'Captcha' in response.text:

headers = {

"user-agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

}

session = requests.session()

resp = session.get(url=response.url, headers=headers)

response1 = etree.HTML(resp.text)

captcha_url = "".join(response1.xpath('//div[@class="a-row a-text-center"]/img/@src'))

amzon = "".join(response1.xpath("//input[@name='amzn']/@value"))

amz_tr = "".join(response1.xpath("//input[@name='amzn-r']/@value"))

download_captcha(captcha_url)

captcha_text = captcha_verfiy('captcha.png')。

url_new = f "https://www.amazon.com/errors/validateCaptcha?amzn={amzon}&amzn-r={amz_tr}&field-keywords={captcha_text}"

resp = session.get(url=url_new, headers=headers)

if "对不起,我们需要确认您不是机器人" not in str(resp.text):

response2 = HtmlResponse(url=url_new, headers=headers,body=resp.text, encoding='utf-8')

if "对不起,我们需要确认您不是机器人" not in str(response2.text):

返回 response2

else:

返回请求

else:

return response

def process_exception(self, request, exception, spider):

通过

def spider_opened(self, spider):

spider.logger.info('Spider opened: %s' % spider.name)

这就是有关亚马逊数据抓取的所有代码。

如需帮助,请联系 OkeyProxy 支持 知道。

推荐的代理供应商: Okeyproxy - 5 大 Socks5 代理服务器提供商,拥有来自 200 多个国家/地区的 150M+ 住宅代理服务器。 立即免费试用 1GB 住宅代理服务器!